Why we started R4D’s evaluation & adaptive learning practice

A little over seven years ago, I was at lunch with a trusted colleague when an idea struck. The only thing I had to jot it down on was a napkin, but somehow that felt fitting. In the moment, I felt a fleeting rush: lots of wild and innovative ideas started on a napkin, right? Was I on to something?

I lost the napkin and have forgotten the details, but the ideas formed the core of a new R4D Evaluation & Adaptive Learning practice that we built, in fits and starts, over the ensuing years.

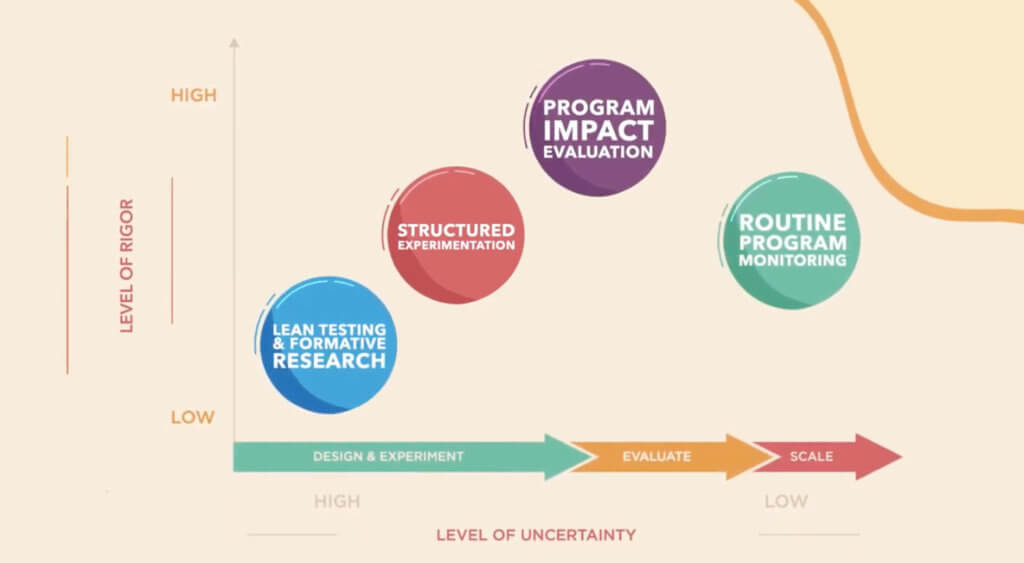

The underlying conviction was that we needed to rethink why and how we did monitoring and evaluation (M&E) work. The development community had taken a huge step forward in the preceding years as impact evaluation and RCTs took hold (capped by this week’s exciting announcement of the Nobel Prize in economics). But RCTs, valuable as they are, didn’t always fill the learning gaps we were seeing. We needed to think more about how evidence and feedback could advance learning in the international development community. Our theories of change for how evidence leads to impact needed a reboot.

If we wanted to influence practice, we needed to bring a researcher’s mindset to a practitioner’s problems.

What if we built a team that could work with partners throughout the project cycle, helping them generate feedback earlier in that cycle? This approach would enable projects to fail faster, and use data and experimentation to iterate their way toward programs that were more likely to achieve impact (and could then be rigorously evaluated to confirm this).

It didn’t take long to realize that my thinking wasn’t all that original. The ideas I was drawing on were in the zeitgeist and part of a larger conversation that lots of people were having in different corners of global development (see here, here, here, and here).

The excitement of these ideas came with a sense that there was still a gap, a need to put them into practice, and that R4D was a good place to try: probably the right kind of organization to be doing some of this work. An entrepreneurial organization where we could collaborate with researchers, but where our primary motivation was improving practice and answering questions linked to real, on-the-ground strategies and decisions. One with smart, capable colleagues committed to getting things right and getting things done. A place not pre-committed to one set of evaluation or research methods, but where we could try to take the best of all of them.

That moment still sticks with me as a beginning of sorts: the start of a journey that I’ve been on since then with many (trusted, brilliant, and good human) fellow travelers both inside and outside of R4D.

Our journey has involved riding the wave of a paradigm shift in the evaluation and learning space — and hopefully contributing to it in meaningful ways as well.

Today we’re launching an explainer video to continue contributing to this conversation.

Poverty, poor health, low quality of education and undernutrition are all tough, wicked problems. Uncertainty about the best way forward is the only thing that’s certain.

If we can acknowledge and embrace the uncertainty, use feedback to replace some of the uncertainty with new knowledge, and iterate and adapt, we might collectively get closer to our goals.

But while many share these principles and this mindset, we still run into practitioners, funders, and evaluators who ask something like the following:

“When resources — time, effort, funding, expertise — are limited, where should we start? How can we get technical complexity and rigor right, and learn as much as possible?”

This video offers our framework for doing so. We hope it helps practitioners, funders, evaluators, and researchers alike think about the range of tools and feedback methods at their disposal.

When applied, we hope it can help shift the dialogue toward a new, emerging mindset: Instead of trying to prove “what works” and replicate “success,” what if we truly embraced the uncertainty inherent in tackling wicked problems in different contexts? What if we shifted some of our evaluation resources to generate feedback much earlier in the program cycle, when programs often hit their first points of failure? Could we do a better job of improving programs, saving expensive impact evaluations for programs that are as well designed as possible through these same feedback and iteration loops?

Like much of our work, the video was truly a team effort and builds on the work of many colleagues. In particular, special thanks to the Evaluation and Adaptive Learning team and R4D Communications team for making it happen.